Normals to height

Computing depth from gradients

Previously, I wrote about DeepBump, a machine learning experiment to generate normal maps from single pictures. However, when creating 3D (or 2D) content, the actual height ("displacement") of the surface might also be useful. To complement DeepBump, NormalHeight is a small extra Blender add-on that computes the height from normals.

Depth-from-gradient

Going from a gradient (normal map) to the depth (height map) is often called "shape-from-gradient" or "depth-from-gradient". It is typically used in computer vision, for instance after recovering the normal map of an object through photometric stereo. In our case, DeepBump already takes care of generating the normal map from a single photo. NormalHeight then uses the Frankot-Chellappa algorithm (Eq. 22), which works in the Fourier domain to compute a height from the normals.
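To make the idea more concrete, here is a minimal NumPy sketch of Frankot-Chellappa integration. It is not NormalHeight's actual code: the function names, the RGB encoding in [0, 1], the sign of the green channel and the final normalization are all assumptions made for illustration. The gradients p = dz/dx and q = dz/dy are read from the normals, and the height spectrum is computed as Z = (-j·wx·P - j·wy·Q) / (wx² + wy²).

```python
import numpy as np

def frankot_chellappa(p, q):
    """Integrate gradients p = dz/dx and q = dz/dy into a height map z.

    The result is defined up to a constant, so its mean is set to zero.
    The FFT implicitly treats the image as periodic (tileable).
    """
    rows, cols = p.shape
    # Angular frequencies along x (columns) and y (rows).
    wx = 2.0 * np.pi * np.fft.fftfreq(cols)
    wy = 2.0 * np.pi * np.fft.fftfreq(rows)
    wx, wy = np.meshgrid(wx, wy)  # both of shape (rows, cols)

    P = np.fft.fft2(p)
    Q = np.fft.fft2(q)

    denom = wx**2 + wy**2
    denom[0, 0] = 1.0  # avoid dividing by zero at the DC term
    Z = (-1j * wx * P - 1j * wy * Q) / denom
    Z[0, 0] = 0.0      # the mean height is unknown, pick zero

    return np.real(np.fft.ifft2(Z))

def normals_to_height(normal_map):
    """Hypothetical helper: RGB normal map in [0, 1] -> height in [0, 1].

    Assumes normals are encoded as rgb = (n + 1) / 2 with the y axis
    following the array rows; flip the sign of q for the other convention.
    """
    n = normal_map[..., :3].astype(np.float64) * 2.0 - 1.0
    nz = np.clip(n[..., 2], 1e-3, None)  # guard against grazing normals
    p = -n[..., 0] / nz
    q = -n[..., 1] / nz
    z = frankot_chellappa(p, q)
    z -= z.min()
    span = z.max()
    return z / span if span > 0 else z
```

Because the Fourier transform assumes a periodic signal, this sketch behaves best on tileable textures; on other images the borders can show low-frequency artifacts.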

Usage

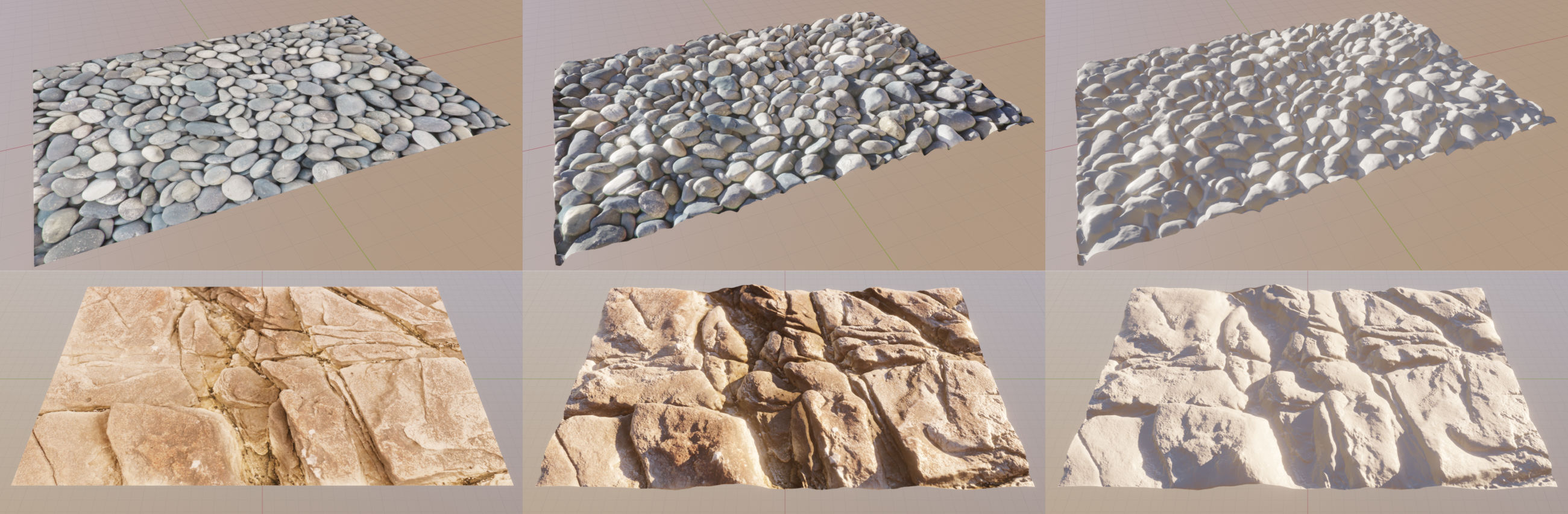

By combining DeepBump with NormalHeight, we can now get a displacement map from a single photo:

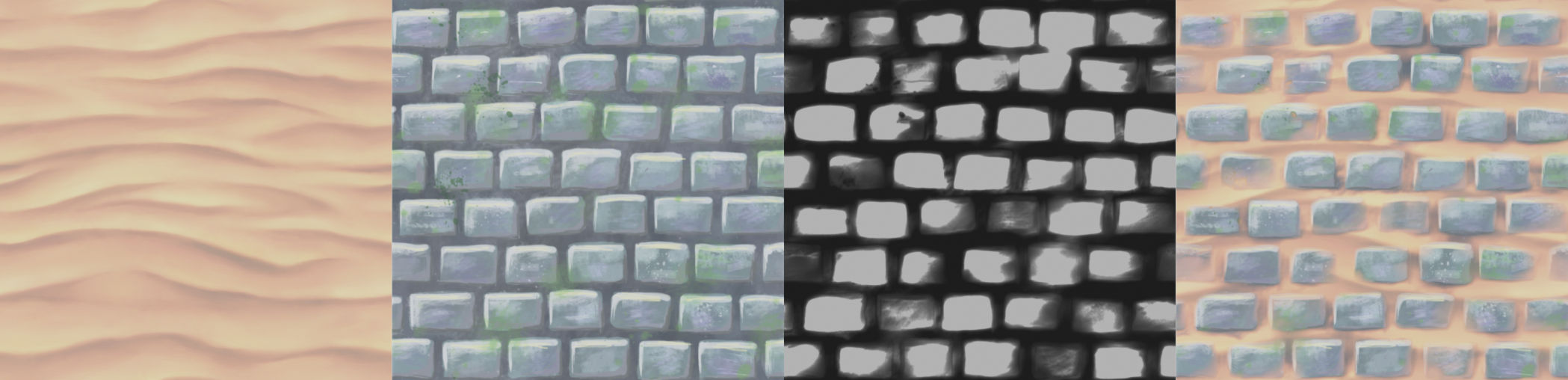

Displacement can also be used for material mixing by thresholding the generated height (3rd picture) and using it as a mask.
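In Blender this kind of mixing would normally be set up with shader nodes, but the arithmetic behind it is simple. The sketch below is only an illustration under assumptions: `height_mask` and `mix_materials` are hypothetical names, the height is assumed to be normalized to [0, 1], and the soft edge (a smoothstep around the threshold) is a choice made here rather than something the add-on prescribes.

```python
import numpy as np

def height_mask(height, threshold=0.5, softness=0.05):
    """Turn a height map in [0, 1] into a soft 0..1 mask.

    Values well below the threshold map to 0, values well above map to 1,
    with a smoothstep transition of half-width `softness` (must be > 0).
    """
    lo = threshold - softness
    hi = threshold + softness
    t = np.clip((height - lo) / (hi - lo), 0.0, 1.0)
    return t * t * (3.0 - 2.0 * t)

def mix_materials(low, high, mask):
    """Blend two RGB images of shape (H, W, 3) with a mask of shape (H, W)."""
    m = mask[..., None]  # add a channel axis so the mask broadcasts over RGB
    return low * (1.0 - m) + high * m
```

For example, `mix_materials(moss, stone, height_mask(height, 0.6))` would show the hypothetical `stone` texture on the high parts of the surface and `moss` in the crevices.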

Limitations

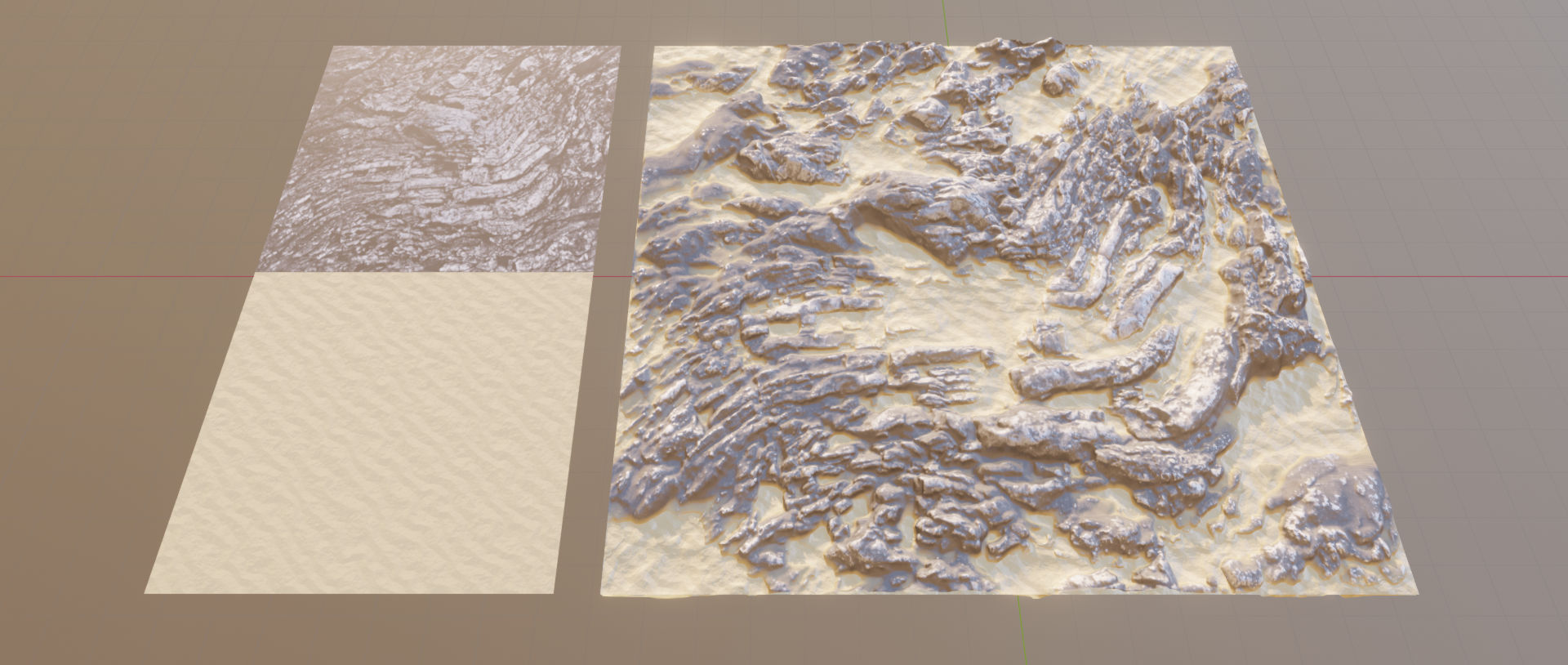

DeepBump learned to create locally probable normals. However, because the texture is processed tile by tile, the normals of large pictures might lack overall structure, and so might the resulting height map.

To go further, we could get better results by giving the neural net more context about the whole picture rather than processing tiles independently. Moreover, we might get better height maps by teaching the neural net to predict the depth directly rather than the normals.