DeepBump

Normal Map generation using Machine Learning

Normal maps encode surface orientation and are used a lot in computer graphics. They allow "faking" details without having to use extra geometry. Artists typically obtain normal maps in various ways: they might sculpt detailed geometry and then project it onto a lower-resolution mesh (retopology), generate materials procedurally (i.e. using math functions), use photogrammetry, etc.
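To make "encoding orientation" concrete, here is a minimal sketch of the common convention for storing a unit normal in an 8-bit RGB pixel: each component in [-1, 1] is remapped to [0, 255]. The function names are hypothetical and only meant for illustration; they are not part of DeepBump.

```python
import math

def encode_normal(n):
    """Map a unit normal (x, y, z), each component in [-1, 1], to 8-bit RGB."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in n)

def decode_normal(rgb):
    """Map an 8-bit RGB pixel back to a unit-length normal."""
    v = [c / 255 * 2.0 - 1.0 for c in rgb]
    # Quantization to 8 bits loses precision, so re-normalize after decoding.
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

# A normal pointing straight out of the surface.
flat = encode_normal((0.0, 0.0, 1.0))
print(flat)  # (128, 128, 255)
```

This is why flat areas of a tangent-space normal map show up as a light blue-purple color.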

Those techniques require some manual effort. Sometimes you just want to quickly grab a photo and use it in your scene, so it makes sense to try to generate normal maps from single pictures.

A simple approach is to treat the grayscale image as a height map and derive the normal map from it (by taking the normal of the slope at each pixel). It can give decent results on some textures, but it remains a rough approximation, as darker pixels don't always mean there's a hole in the surface.
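The "grayscale = height" baseline can be sketched in a few lines. This is an illustrative version under stated assumptions, not the code used for the comparisons on this page: the height grid is a plain list of rows with values in [0, 1], slopes are estimated with central differences clamped at the borders, and `strength` is a hypothetical parameter that scales the bumps. The sign of the Y component depends on the normal map convention of the target renderer, so it may need flipping.

```python
import math

def height_to_normals(height, strength=1.0):
    """Derive per-pixel unit normals from a 2D height grid (list of rows).

    For a surface z = h(x, y), the normal is proportional to
    (-dh/dx, -dh/dy, 1). Slopes use central differences, with
    neighbor indices clamped at the image borders.
    """
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            left = height[y][max(x - 1, 0)]
            right = height[y][min(x + 1, cols - 1)]
            up = height[max(y - 1, 0)][x]
            down = height[min(y + 1, rows - 1)][x]
            dx = (right - left) * 0.5 * strength
            dy = (down - up) * 0.5 * strength
            length = math.sqrt(dx * dx + dy * dy + 1.0)
            row.append((-dx / length, -dy / length, 1.0 / length))
        normals.append(row)
    return normals

# A ramp rising to the right: normals tilt toward -x.
ramp = [[0.0, 1.0, 2.0] for _ in range(3)]
normals = height_to_normals(ramp)
```

The weakness mentioned above is visible in the code: brightness is the only input, so a dark stain on a flat wall produces the same normals as an actual dent.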

DeepBump

DeepBump is an experiment using machine learning for reconstructing normals from single pictures. It mainly makes use of an encoder-decoder U-Net with a MobileNetV2 architecture. Training data is taken from real-world photogrammetry and procedural materials.

This tool is available both as a Blender add-on and as a command-line program. Check here for install instructions. Once installed, you can generate a normal map from a picture (image texture node in Blender) in one click:

Results

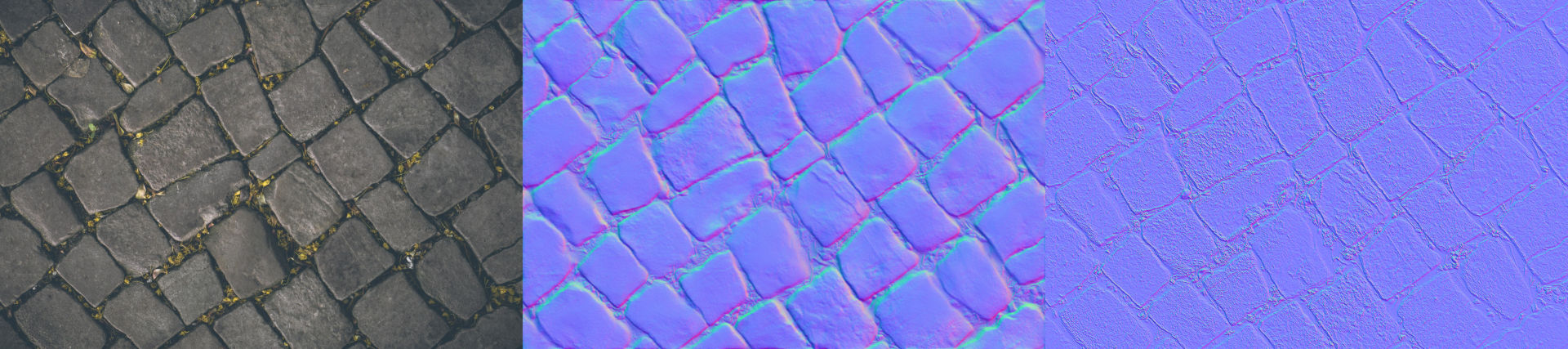

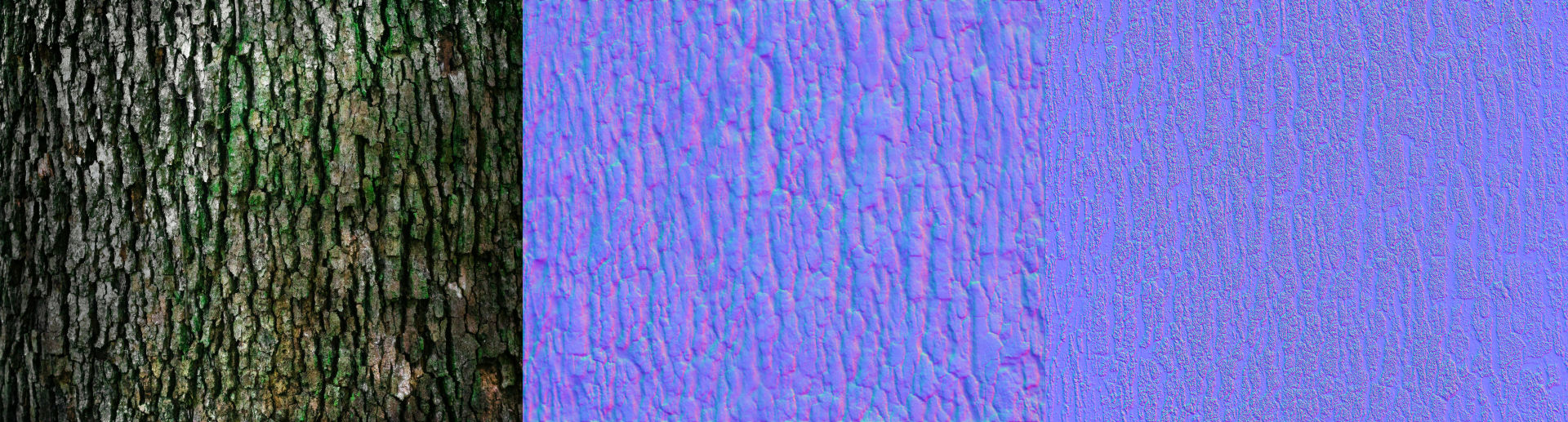

Here are a few shots of normal maps obtained using DeepBump (middle) and compared with the simple "grayscale = height" method (right):

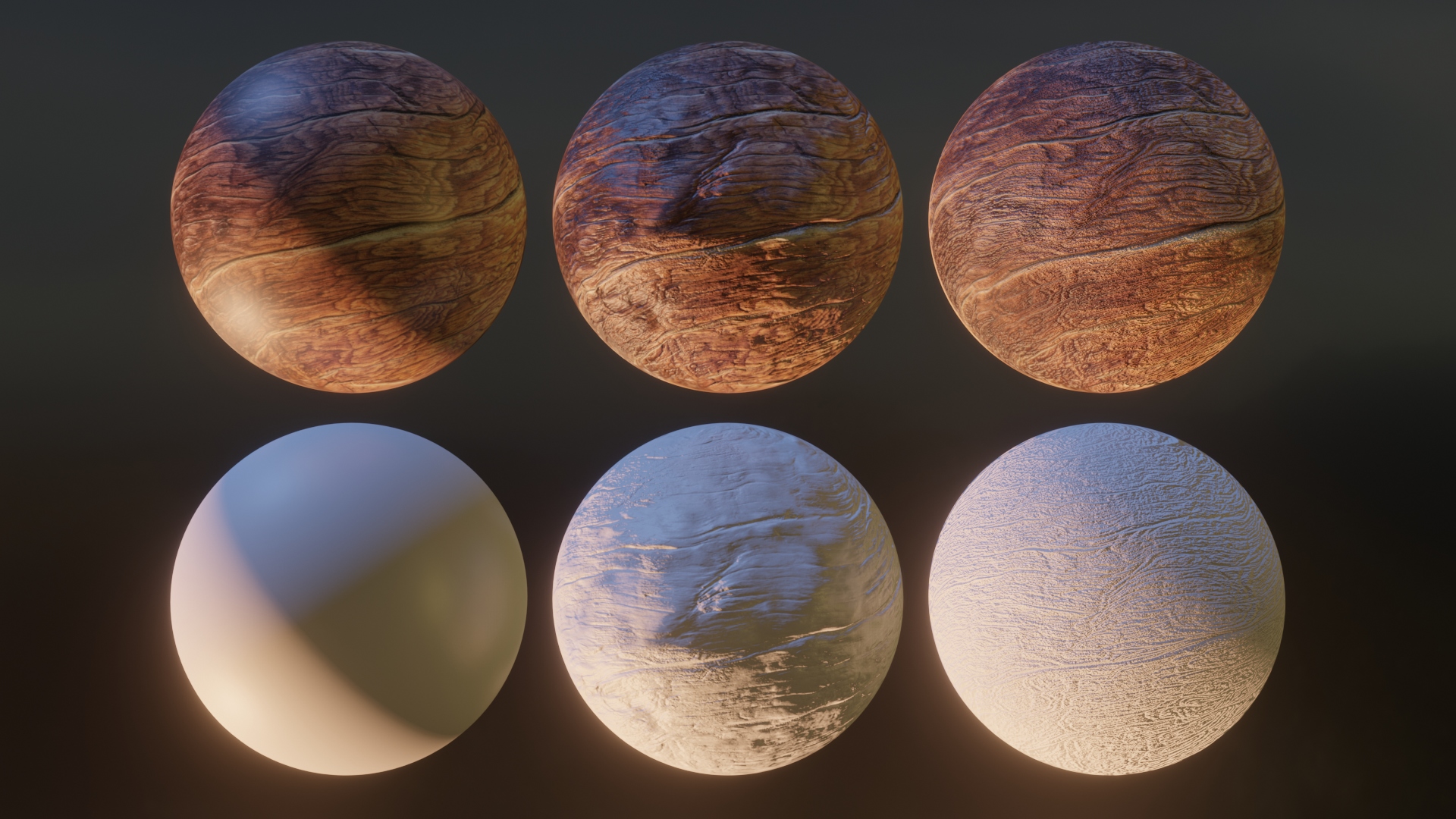

Still comparing the two methods, this time using this photo as input: we apply the normal maps and add some lights (rendered with Blender's realtime engine Eevee). The second row is without color to better visualize the normal maps:

Another example with moving lights, without (left) and with (right) the generated normal map:

In some cases, it might even work decently on hand-painted textures:

Limitations

Despite generalization techniques, machine learning models still depend on their training data. If the input picture is very different from what the neural net was trained on, chances are the output won't be ideal. In any case, it's just one click, so it costs nothing to give it a try.

Thanks

Many thanks to the authors of texturehaven.com and cc0textures.com and to their Patreon supporters for making such high-quality assets available to all without restrictions. DeepBump's training dataset is based on those. Thanks to segmentation_models for making it easy to experiment with different network architectures in PyTorch.